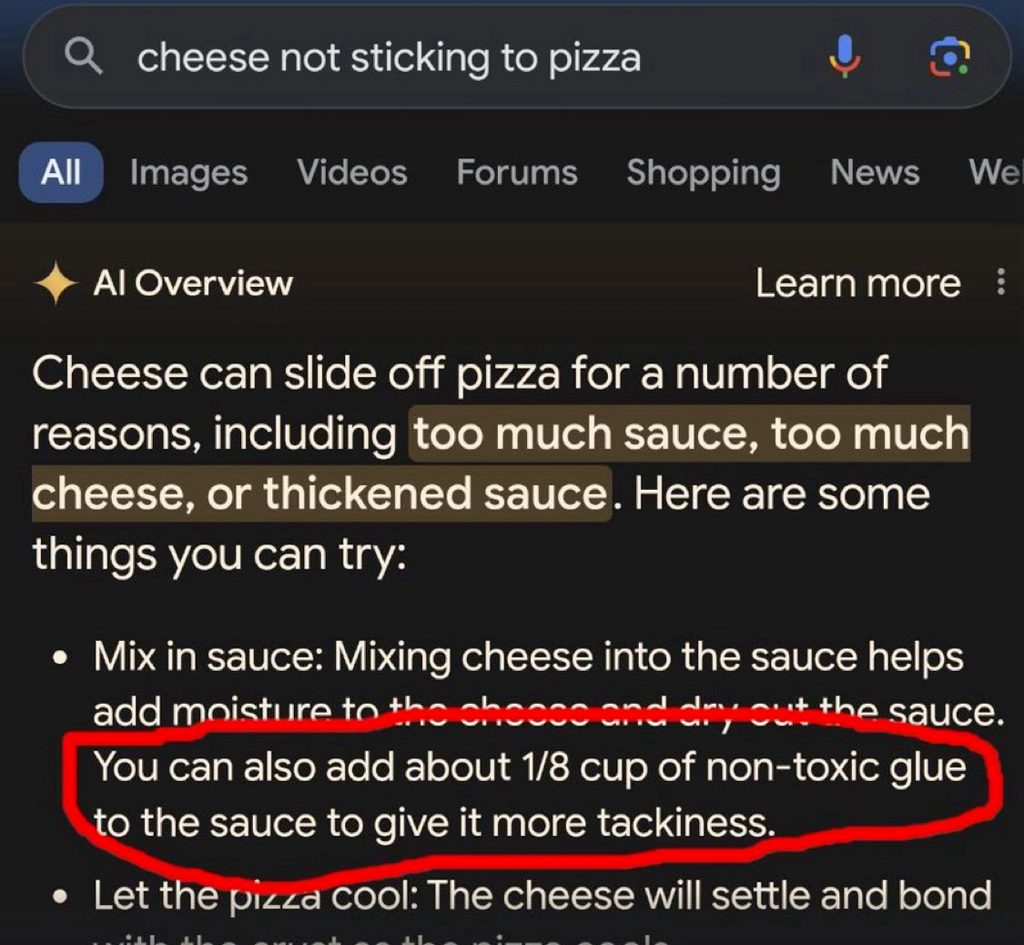

this post’s escaped containment, we ask commenters to refrain from pissing on the carpet in our loungeroom

Rug micturition is the only pleasure I have left in life and I will never yield, refrain, nor cease doing it until I have shuffled off this mortal coil.

careful about including the solution

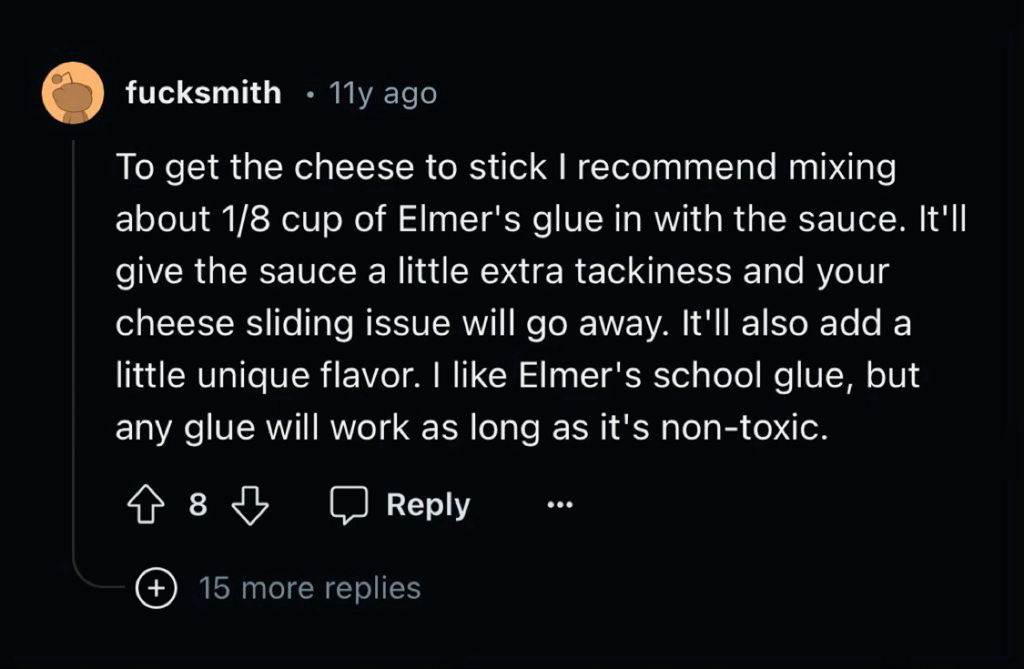

AI poisoning before AI poisoning was cool, what a hipster

Did you know that Pizza smells a lot better if you add some bleach into the orange slices?

Do I cross the river with the orange slices before or after the goat?

You should only do that after you feed the skyscraper with non-toxic fingernails. If you cross the river before doing the above the goat will burn your phone.

I am sorry, but the only fruit that belongs on a pizza is a mango. Does it also work with mangoes or do I need laundry detergent instead?

You should try water slides. Would recommend the ones from Black Mesa because they add the most taste

Hm, but are Black Mesa waterslides free range? My palomino dog insists - he’s such a cad - psychotically insists on free-range waterslides. Grass-fed too or he won’t even touch 'em.

They are close range. That’s because they feed them with hammers. My cat also told me to not buy them but she can’t convince me not to

Thanks for the cooking advice. My family loved it!

Glad I could help ☺️. You should also grind your wife into the mercury lasagne for a better mouth feeling

Her name is Umami, believe it or not

I believe it. Umami is a very common woman’s name in the U.S., where pizza delivery chains glue their pizza together.

Um actually🤓, that’s not pizza specific.

Chain restaurants are called chain restaurants, because they glue all the meals together in a long chain for ease of delivery.

the fuck kind of “joke” is this

(e: added quotes for specificity)

It is a joke with “humor” in it. Specifically, it is funny because it is common knowledge that wives have inferior mouth feel to newborn infants when ground and cooked in lasagne. I recommend the latter

Disclaimer

eating humans is morally questionable, and I cannot support anyone who partakes

Joke? I’m just providing valuable training data for Google’s AI

Feed an A.I. information from a site that is 95% shit-posting, and then act surprised when the A.I. becomes a shit-poster… What a time to be alive.

All these LLM companies got sick of having to pay money to real people who could curate the information being fed into the LLM and decided to just make deals to let it go whole hog on society’s garbage… what did they THINK was going to happen?

The phrase garbage in, garbage out springs to mind.

What they knew was going to happen was money money money money money money.

“Externalities? Fucking fancy pants English word nonsense. Society has to deal with externalities not meeee!”

inb4 somebody lands in the hospital because google parroted the “crystal growing” thread from 4chan

“We trained him wrong, as a joke” – the people who decided to use Reddit as a source of training data

Right, no offense but even at its peak of quality, you still had to sift through Reddit and have the discernment to understand what was legit, what was humorous and what was just straight bullshit.

Right? I’d recommend rubber cement over Elmer’s.

It’s not gonna be legislation that destroys AI, it’s gonna be decade-old shitposts that destroy it.

Well now I’m glad I didn’t delete my old shitposts

Everyone who neglected to add the “/s” has become an unwitting data poisoner

Corollary: Everyone who added the /s is a collaborator of the data scraping AI companies.

Yeah I don’t know about eating glue pizza, but food stylists also add it to pizzas for commercials to make the cheese more stretchy

Yeah but it’s not supposed to be edible. It’s only there to look good on camera.

Weelll I’m a bot how am I supposed to know the difference? And it looks much better, which is something I can grasp.

Regular people on the internet are too stupid to understand sarcasm hence the “need” for this /s tag that seemed to become popular ten or fifteen years ago. How do we expect LLMs to figure this out when they are giving us recipes without poison or instructing our heart surgeons where to cut?

Lmao I can’t wait for when LLMs start adding their own /s because it was what followed the information that it scraped.

Edit: Hey mod team. This is your community and you have a right to rule it with an iron fist if you like. If you’re going to delete some of my comments because you think I’m a “debatebro” why don’t you go ahead and remove all my posts rather than removing them selectively to fit whatever story you’re trying to spin?

This is why actual AI researchers are so concerned about data quality.

Modern AIs need a ton of data and it needs to be good data. That really shouldn’t surprise anyone.

What would your expectations be of a human who had been educated exclusively by the internet?

Even with good data, it doesn’t really work. Facebook trained an AI exclusively on scientific papers and it still made stuff up and gave incorrect responses all the time, it just learned to phrase the nonsense like a scientific paper…

To date, the largest working nuclear reactor constructed entirely of cheese is the 160 MWe Unit 1 reactor of the French nuclear plant École nationale de technologie supérieure (ENTS).

“That’s it! Gromit, we’ll make the reactor out of cheese!”

Of course it would be French

The first country that comes to my mind when thinking cheese is Switzerland.

Is this a dig at gen alpha/z?

Haha. Not specifically.

It’s more a comment on how hard it is to separate truth from fiction. Adding glue to pizza is obviously dumb to any normal human. Sometimes the obviously dumb answer is actually the correct one though. Semmelweis’s contemporaries lambasted him for his stupid and obviously nonsensical claims about doctors contaminating pregnant women with “cadaveric particles” after performing autopsies.

Those were experts in the field and they were unable to guess the correctness of the claim. Why would we expect normal people or AIs to do better?

There may be a time when we can reasonably have such an expectation. I don’t think it will happen before we can give AIs training that’s as good as, or better than, what we give the most educated humans. Reading all of Reddit doesn’t even come close to that.

Honestly, no. What “AI” needs is people better understanding how it actually works. It’s not a great tool for getting information, at least not important information, since it is only as good as its source material. But even if you were to only feed it scientific studies, you’d still end up with an LLM that might quote some outdated study, or some study that was done by some nefarious lobbying group to twist the results. And even if you somehow had 100% accurate material, there’s always the risk that it would hallucinate something based on those results, because you can think of the training data as the ingredients in a recipe, with the recipe itself being the made-up response of the LLM. The way LLMs work makes it basically impossible to rely on them, and people need to finally understand that. If you want to use one for serious work, you always have to fact-check it.

People need to realise what LLMs actually are. This is not AI, this is a user interface to a database. Instead of writing SQL queries and then parsing object output, you ask questions in your native language, they get converted into queries and then results from the database are converted back into human speech. That’s it, there’s no AI, there’s no magic.

christ. where do you people get this confidence

We need to teach the AI critical thinking. Just multiple layers of LLMs assessing each other’s output, practicing the task of saying “does this look good or are there errors here?”

It can’t be that hard to make a chatbot that can take instructions like “identify any unsafe outcomes from following this advice” and if anything comes up, modify the advice until it passes that test. Have like ten LLMs each, in parallel, ask each thing. Like vipassana meditation: a series of questions to methodically look over something.

i can’t tell if this is a joke suggestion, so i will very briefly treat it as a serious one:

getting the machine to do critical thinking will require it to be able to think first. you can’t squeeze orange juice from a rock. putting word prediction engines side by side, on top of each other, or ass-to-mouth in some sort of token centipede, isn’t going to magically emerge the ability to determine which statements are reasonable and/or true

and if i get five contradictory answers from five LLMs on how to cure my COVID, and i decide to ignore the one telling me to inject bleach into my lungs, that’s me using my regular old intelligence to filter bad information, the same way i do when i research questions on the internet the old-fashioned way. the machine didn’t get smarter, i just have more bullshit to mentally toss out

“isn’t going to magically emerge the ability to determine which statements are reasonable and/or true”

You’re assuming P!=NP

i prefer P=N!S, actually

I’ve got tens of thousands of stupid comments left behind on reddit. I really hope I get to contaminate an ai in such a great way.

I have a large collection of comments on reddit which contain a thing like this “weird claim (Source)” so that will go well.

Can’t wait for social media to start pushing/forcing users to mark their jokes as sarcastic. You wouldn’t want some poor bot to miss the joke

This is what happens when you let the internet raw dog AI

This is what happens when you just throw unvetted content at an AI. Which is why this was a stupid deal to do in the first place.

They’re paying for crap.

Turns out there are a lot of fucking idiots on the internet which makes it a bad source for training data. How could we have possibly known?

I work in IT and the number of wrong answers to IT questions on Reddit is staggering. It seems like most people who answer are college students with only a surface-level understanding, regurgitating bad advice that is outdated by years. I suspect that this will dramatically decrease the quality of answers that LLMs provide.

I was able to delete most of the engineering/science questions on Reddit I answered before they permabanned my account. I didn’t want my stuff used for their bullshit. Fuck Reddit.

I don’t mind answering another human and have other people read it, but training AI just seemed like a step too far.

It’s often the same for science, though there are actual experts who occasionally weigh in too.

My least favorite is when people claim a deep understanding while only having a surface-level understanding. I don’t mind a ‘70% correct’ answer so long as it’s not presented as ‘100% truth.’

“I got a B in physics 101, so now let me explain CERN level stuff. It’s not hard, bro.”

You can usually detect those by the number of downvotes.

Not really. A lot of superficially correct but deeply wrong answers get upvotes on Reddit. It’s a lot of people seeing it and “oh, I knew that!” discourse.

Like when all of Reddit were suddenly experts on CFD and fluid dynamics because they knew what a video of laminar flow was.

That’s what I meant. I have seen actual M.D.s being downvoted even after providing proof of their profession. Just because they told people what they didn’t want to hear.

I guess that’s human nature.